Please note: I am not an electrician or an electrical professional. Please defer to electricians who disagree with me on this topic.

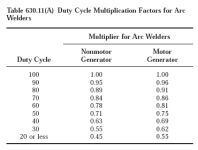

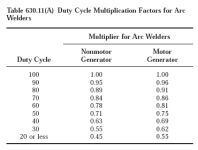

One thing to keep in mind is that the NEC allows you to derate the wire gauge that feeds a receptacle if the receptacle is only ever used for an intermittent-use appliance like a welder. This is because the welder's duty cycle is less than 100%, so it puts less heat into the wiring than something like an electric motor would, since a motor runs at basically 100% duty cycle. The relevant table in the NEC is Table 630.11(A).

So let's say you have a welder that draws 50 amps at max output and has a 30% duty cycle at max output. The multiplier for a 30% duty cycle is 0.55, so you could size your conductor as if the load were 50 * 0.55, or 27.5 amps. The wires in your wall are sized for a 30-amp load. 30 amps is greater than 27.5 amps, so you would be fine putting a 50-amp receptacle and 50-amp breaker on that circuit, as long as that breaker only feeds that one receptacle and the circuit is only ever used for that welder and nothing else. If your welder drew 40 amps at max output, you could go up to a 50% duty cycle, since the multiplier for a 50% duty cycle is 0.71. 40 amps * 0.71 = 28.4 amps, which is less than the 30-amp rating of the wires that are presumably in your wall.
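The arithmetic above can be sketched as a small Python check. This is only an illustration of the multiplication described in this answer, not a substitute for reading the NEC table or asking an electrician. The function names are made up for this example, and the lookup only contains the two multipliers quoted above (30% and 50%); any other duty cycle has to be looked up in the table itself.

```python
# Duty-cycle multipliers quoted in this answer (30% -> 0.55, 50% -> 0.71).
# Deliberately incomplete: other duty cycles must come from the NEC table.
DUTY_CYCLE_MULTIPLIER = {30: 0.55, 50: 0.71}


def required_conductor_ampacity(welder_max_amps, duty_cycle_percent):
    """Return the current the conductor must be sized for (hypothetical helper)."""
    if duty_cycle_percent not in DUTY_CYCLE_MULTIPLIER:
        raise ValueError("Multiplier not listed here; look it up in the NEC table")
    return welder_max_amps * DUTY_CYCLE_MULTIPLIER[duty_cycle_percent]


def wiring_is_adequate(welder_max_amps, duty_cycle_percent, wire_ampacity):
    """True if the existing wire's ampacity covers the derated welder current."""
    return required_conductor_ampacity(welder_max_amps, duty_cycle_percent) <= wire_ampacity


if __name__ == "__main__":
    # The two examples from the text, both checked against 30-amp wiring.
    for amps, duty in [(50, 30), (40, 50)]:
        needed = required_conductor_ampacity(amps, duty)
        ok = wiring_is_adequate(amps, duty, 30)
        print(f"{amps} A welder at {duty}% duty cycle: size for {needed:.1f} A, OK on 30 A wire: {ok}")
```

Note that a 50-amp welder with a 50% duty cycle would work out to 35.5 amps, which is more than the 30-amp wiring can carry, so the same check would fail in that case.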

Please note that with older transformer-style welders, it is entirely up to you to obey the duty cycle. Newer and higher-end welders have thermal protection circuits that enforce the duty cycle by shutting down the welder if you exceed it. Older ones will just let you burn them up. And if you've used the NEC "multiplier" to derate your in-wall wiring, you may overheat and burn that up too. Play safe!

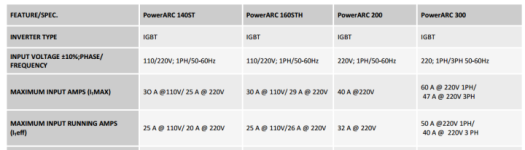

You are probably going to want to put a 50-amp receptacle on the wall anyway, since the standard plug for a 220 V welder is a NEMA 6-50. Swapping the 30-amp receptacle for a 50-amp receptacle will be much cheaper than building or buying a pigtail to adapt the welder's plug to the 30-amp receptacle.